As semiconductor devices shrink to dimensions that defy classical intuition, engineers find themselves in unfamiliar territory. Pushing below the 5-nanometer threshold means working in a regime where randomness plays a more powerful role than design intention, and tools become less deterministic with each generation. Erik Hosler, a consultant and former EUV lithography specialist, reflected on this uncertainty in recent discussions at the SPIE lithography conference, where the tone has shifted from confident scaling strategies to open-ended exploration.

The search for nano-scale solutions has never been more urgent or uncertain. Engineers contend with physical phenomena that were once negligible but now dominate device performance and reliability. Traditional models and fabrication processes are being pushed to their limits. The path forward is not clearly marked, and in many ways, it resembles groping in the dark, searching not only for new materials and tools but for entirely new frameworks of understanding.

Stochastic Challenges at the Atomic Scale

One of the defining hurdles of modern semiconductor manufacturing is stochastic variability. At nanometer scales, even small statistical fluctuations in photon exposure, resist chemistry, or etching behavior can cause defects that impact yield. These errors are not always predictable or repeatable, which makes them far more difficult to isolate and correct.

In extreme ultraviolet lithography, shot noise from the limited number of photons per feature can create uneven energy absorption in the photoresist. This leads to line edge roughness, missing contacts, or bridging defects that do not follow any clear pattern. Engineers must deal with this uncertainty using statistical methods, increased exposure doses, or process redundancy.
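To give a feel for the numbers, here is a minimal sketch of photon shot noise under Poisson statistics, where the relative fluctuation in photon count scales as 1/sqrt(N). The dose values and the 10 nm square feature are illustrative assumptions, not figures from any particular process, and the calculation counts incident photons only, ignoring how many the resist actually absorbs.

```python
import math

PLANCK_J_S = 6.62607015e-34      # Planck constant (J*s)
LIGHT_SPEED_M_S = 2.99792458e8   # speed of light (m/s)
EUV_WAVELENGTH_M = 13.5e-9       # EUV lithography wavelength (m)


def photons_per_area(dose_mj_per_cm2: float, side_nm: float) -> float:
    """Mean number of incident photons on a square of side `side_nm`."""
    photon_energy_j = PLANCK_J_S * LIGHT_SPEED_M_S / EUV_WAVELENGTH_M
    area_cm2 = (side_nm * 1e-7) ** 2              # 1 nm = 1e-7 cm
    energy_j = dose_mj_per_cm2 * 1e-3 * area_cm2  # mJ -> J
    return energy_j / photon_energy_j


def relative_shot_noise(mean_photons: float) -> float:
    """Poisson statistics: standard deviation / mean = 1 / sqrt(N)."""
    return 1.0 / math.sqrt(mean_photons)


if __name__ == "__main__":
    # Hypothetical doses (mJ/cm^2) and a hypothetical 10 nm x 10 nm feature.
    for dose in (30.0, 60.0, 120.0):
        n = photons_per_area(dose, side_nm=10.0)
        print(f"dose={dose:6.1f}  photons={n:8.0f}  "
              f"relative noise={relative_shot_noise(n):.2%}")
```

Because the noise falls only with the square root of the photon count, quadrupling the dose merely halves the relative fluctuation, which is why dose alone is an expensive lever.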

But as features approach 2 nanometers and below, even increasing the dose cannot eliminate randomness. There are physical limits to how much energy materials can absorb or tolerate before degrading. The shrinking margin for error forces fabs to experiment with innovative solutions, often without knowing whether success is even possible.

The Expanding Role of Advanced Patterning

To combat this growing complexity, engineers are expanding their toolkits well beyond traditional lithography. They are evaluating novel patterning approaches, reevaluating resist materials, and incorporating computational modeling at every stage of the design and manufacturing pipeline. Erik Hosler emphasizes, “We are looking at just about everything in advanced patterning.”

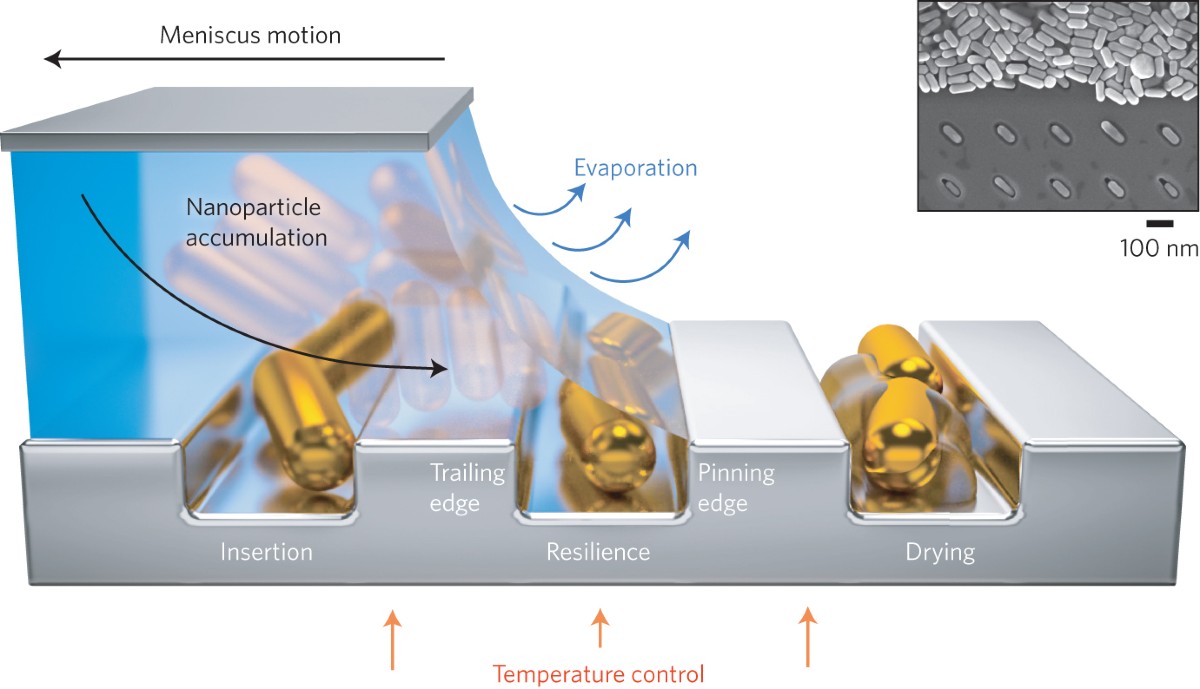

The remark underscores the sheer breadth of approaches now under consideration. High numerical aperture EUV systems are being developed to push resolution further, though they come with optical and overlay challenges. Meanwhile, directed self-assembly, nanoimprint lithography, and multi-beam electron beam systems are being explored for their potential to fill specific roles or complement existing tools.

None of these methods alone offers a silver bullet. Rather, they represent a growing mosaic of solutions. Some will find their way into high-volume production, while others will remain in research labs. But all are part of the broader search for clarity in an increasingly unpredictable space.

Metrology’s Visibility Problem

If engineers are groping in the dark, then metrology is their flashlight. Unfortunately, that flashlight is not always bright enough to see what is going wrong.

Traditional inspection systems struggle to resolve features at sub-nanometer dimensions. As a result, fabs are investing in hybrid metrology platforms that combine optical inspection, scanning electron microscopy, and computational reconstruction. These tools aim not just to measure features but also to anticipate variability and catch potential yield killers before they propagate through the process.

However, no inspection method today can claim full accuracy at the scales that advanced logic nodes demand. Engineers are learning to rely more on consistency and statistical modeling than on absolute measurements. As one metrology expert at SPIE pointed out, “We focus on repeatability because precision alone is no longer feasible.”

This shift is changing how quality control and yield learning are conducted. Instead of identifying every defect, fabs aim to build resilient systems that can tolerate and recover from small imperfections.

Quantum and Ultrafast Characterization

The movement toward a better understanding of stochastic events has also led engineers to embrace techniques from ultrafast science and quantum physics. Characterizing how photons interact with resist materials on femtosecond and attosecond timescales is now a serious area of research.

Facilities like Imec’s AttoLab are using high-energy laser systems to simulate and observe these interactions in extreme detail. The idea is to watch chemistry unfold in real time, revealing how electrons move, bonds break, and energy spreads through complex molecular structures.

These insights could guide the development of new resist platforms with more stable and predictable behavior under EUV exposure. While still largely academic, this research is one of the few paths available for understanding what happens in the moments that define a successful or failed lithography process.

Design Adaptation and Functional Resilience

Another way engineers are adapting to increasing uncertainty is by rethinking chip architecture itself. As process variability rises, designers are building in tolerance and redundancy to ensure that small defects do not ruin the entire device.

For example, error correction circuits, redundant vias, and statistical layout methods are now common in advanced logic designs. There is also growing interest in defect-aware computing, where chips are designed to detect and route around damaged elements at runtime.
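The appeal of redundant vias can be seen with some back-of-the-envelope arithmetic. The sketch below assumes that via failures are independent and equally likely, which real defects often are not, and the failure probability and connection count are made-up numbers chosen only for illustration. A connection built from k parallel vias fails only if all k fail, so its failure probability drops from p to p^k.

```python
import math


def connection_failure_prob(p_single: float, copies: int) -> float:
    """A connection fails only if every parallel via fails (independence assumed)."""
    return p_single ** copies


def chip_yield(p_single: float, connections: int, copies: int = 1) -> float:
    """Probability that all connections work, for 0 <= p_single < 1.

    Uses log1p so that very small failure probabilities are not
    rounded away before being raised to a very large power.
    """
    q = connection_failure_prob(p_single, copies)
    return math.exp(connections * math.log1p(-q))


if __name__ == "__main__":
    p = 1e-9          # hypothetical per-via failure probability
    n = 10**9         # hypothetical number of via connections on a chip
    print(f"single vias: {chip_yield(p, n, copies=1):.4f}")
    print(f"double vias: {chip_yield(p, n, copies=2):.9f}")
```

Under these assumed numbers, single vias give a yield of roughly 37 percent, while doubling every via brings the via-limited yield very close to 100 percent. The cost is area and routing congestion, which is why redundancy tends to be applied selectively.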

This marks a significant shift in how chips are conceptualized. Rather than aiming for perfect fabrication, engineers are increasingly focused on resilient performance. Chips are designed with failure in mind, not as an anomaly but as a statistical inevitability.

Interdisciplinary Collaboration as a Necessity

Because the challenges of nano-scale manufacturing span physics, chemistry, materials science, and computation, no group can address them in isolation. The push toward solutions is driving deeper collaboration across traditional disciplinary lines.

Materials scientists must work closely with process engineers to understand how a change in composition affects etch behavior. Optical physicists must coordinate with layout engineers to make sure designs can be printed. Computational scientists are needed to model interactions that cannot yet be measured directly.

This type of collaborative work was once considered optional or experimental. Today, it is essential. Without it, the industry cannot hope to build reliable products with atomic-scale precision.

Feeling Forward into the Unknown

Engineers share an understanding that they are navigating uncertainty, guided by experience, experimentation, and a growing set of sophisticated tools. But there is no fixed map. Each new node requires exploration, iteration, and a willingness to question assumptions.

At nano-scale dimensions, intuition alone is not enough. Engineers must rely on simulation, cross-functional learning, and collective insights gathered from conferences, labs, and foundries around the world. It is not just about inventing new tools. It is about building new ways to think about patterning, yield, and reliability.

They are, in a sense, groping in the dark, but they are not lost. With every experiment and shared result, the industry inches forward, gaining clarity and uncovering paths to progress that were previously hidden. It is a process of trial, reflection, and resilience, and it is how innovation survives when visibility is at its lowest.